A better core process for online research

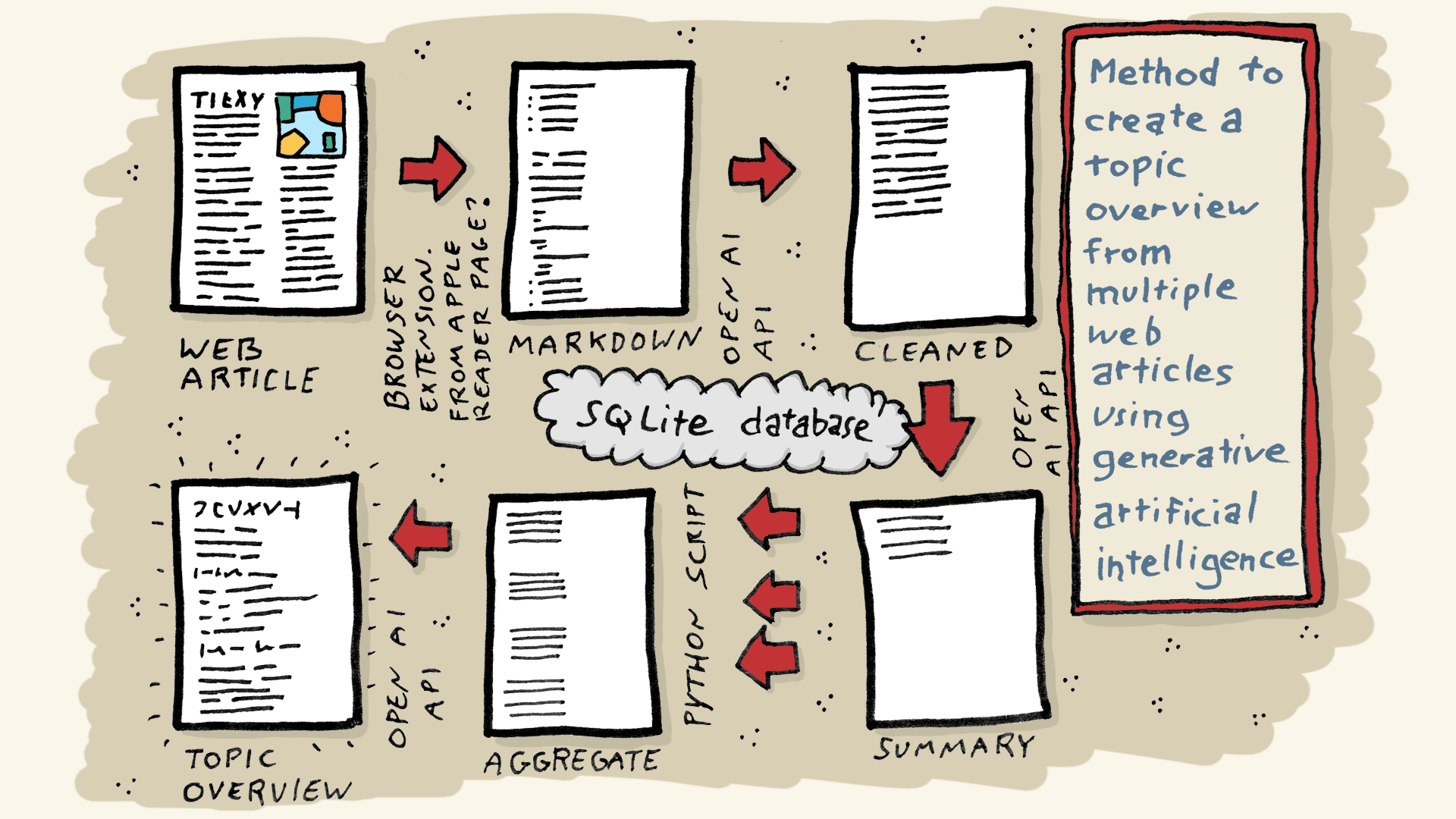

I drew this on the train while thinking about my process. The actual process is a bit different, and no longer involves a SQLite database.

You can find a lot of information about my online research methods in this article: A process to semi-automate research using few-shot classification and GPT-4 summation. With this new method, I no longer use Raindrop as part of the process.

A summary of the new process:

- Step one is the same as before — search for relevant online articles using Kagi and various social content aggregators.

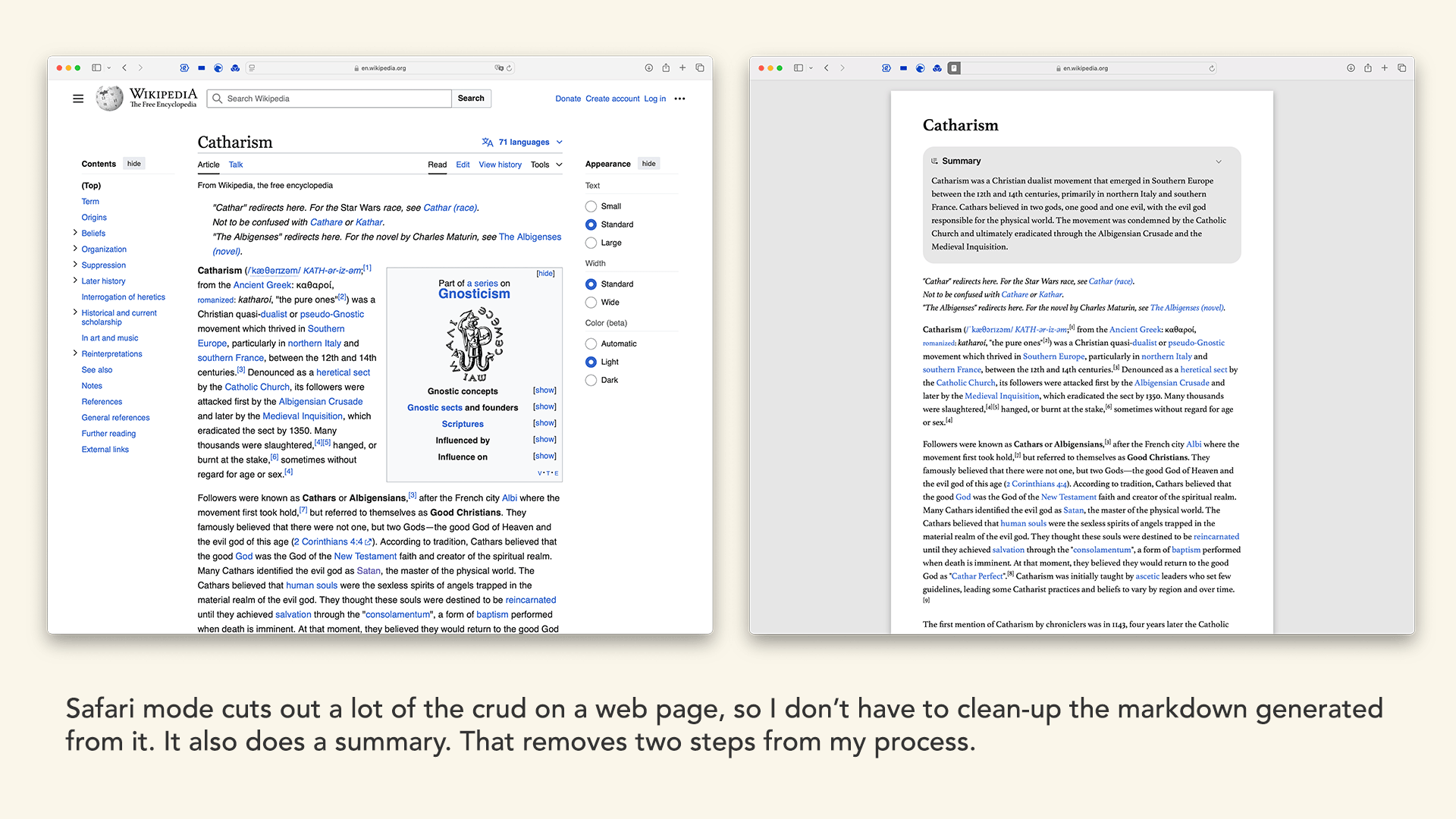

- If I find one I like, get it into Bear App. To do this I can use Safari’s Reader Mode to remove all the adverts and other crud on a web page. Reader Mode now also includes an automatic “Apple Intelligence” summary tool, which eliminates another step in my previous process.

- In Bear App, keep research organised using Bear’s nested tags, under a top-level Research tag.

- Edit, highlight and add comments to the articles as I read them in Bear.

- Use my existing process to convert the Research tag into a Quarto website (perhaps at research.thisisjam.es) so that I can share my research.

- In the future: add to the existing process to do stuff like automatically collate my highlights and notes, and use AI to create overall summaries and indexes.
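As a rough idea of what the highlight-collation step could look like, here is a minimal Python sketch. It assumes the notes have been exported as Markdown, that highlights are written as `==text==`, and that nested tags look like `#research/ai`. The function name `collate_highlights` and the input shape (a dict of note title to Markdown text) are made up for illustration and are not part of my existing process.

```python
import re
from collections import defaultdict

# Assumption: highlights appear as ==text== in the exported Markdown.
HIGHLIGHT = re.compile(r"==(.+?)==")
# Assumption: nested tags look like #research/ai (no spaces in tag names).
# The lookbehind skips things like URL fragments; headings ("# Title") do not
# match because the "#" must be followed directly by a word character.
TAG = re.compile(r"(?<!\S)#([\w-]+(?:/[\w-]+)*)")


def collate_highlights(notes):
    """Group highlighted passages by tag.

    `notes` maps a note title to its Markdown text (hypothetical input shape).
    Returns a dict of tag -> list of (note title, highlighted passage).
    """
    by_tag = defaultdict(list)
    for title, text in notes.items():
        highlights = HIGHLIGHT.findall(text)
        for tag in TAG.findall(text):
            for passage in highlights:
                by_tag[tag].append((title, passage.strip()))
    return dict(by_tag)


if __name__ == "__main__":
    sample = {
        "Article A": "# Article A\n#research/ai\nSome text ==key point== more.\n",
        "Article B": "#research/ai #research/tools\n==another point== here\n",
    }
    for tag, items in collate_highlights(sample).items():
        print(tag, items)
```

The grouped output could then be written out as one index page per tag, or passed to a summarisation step.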

Note there are lots of interesting things I could do with the process to make my shared notes much more useful and interesting than those shared by the Raindrop app. But of course I need to make sure I don’t share a lot of copyrighted material, so my automated processes will need to strip that out. In the meantime I could add a password to the research website so that it’s not public.
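One possible way to strip out the original article text before publishing is to keep only the headings and the passages I highlighted. The sketch below is an assumption about how that might work, not something I have built: it again assumes `==text==` highlights in exported Markdown, and the function name `shareable_version` is hypothetical. My own comments would need some marker convention of their own to survive this filter.

```python
import re

# Assumption: highlights appear as ==text== in the exported Markdown.
HIGHLIGHT = re.compile(r"==(.+?)==")


def shareable_version(markdown_text):
    """Keep headings and highlighted passages; drop the rest of the body."""
    kept = []
    for line in markdown_text.splitlines():
        # A Markdown heading is one or more "#" followed by a space.
        # Tag lines such as "#research/ai" fail this check and are dropped.
        if line.startswith("#") and line.lstrip("#").startswith(" "):
            kept.append(line)
            continue
        # Emit each highlighted passage as its own bullet.
        for passage in HIGHLIGHT.findall(line):
            kept.append(f"- {passage.strip()}")
    return "\n".join(kept)


if __name__ == "__main__":
    note = (
        "# Title\n"
        "#research/ai\n"
        "A long paragraph with ==one key idea== inside.\n"
        "Another paragraph, nothing marked.\n"
    )
    print(shareable_version(note))
```

Short quoted highlights are still excerpts of someone else's work, so this reduces how much of the original is shared rather than removing it entirely.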