Tiro, a personal note-processing system

- Since writing this I have made a few small changes. The main one is that variables are now written with two colons between the name and the value, like this: `variable::value`. This is so that I’m using the same syntax as the Dataview plug-in for Obsidian.

- I am going to experiment with keeping the notes for Tiro in Obsidian. Having the system compatible with Obsidian Dataview opens up a whole new world of possibilities.

Important figures in Ancient Rome had a personal record-keeper called an amanuensis. These were typically higher-status slaves, often well-educated Greeks fluent in multiple languages. The most famous one was Marcus Tullius Tiro, amanuensis to the great writer and orator Cicero. I’ve written before about how I would really love to have an amanuensis of my own, and about my failed attempt to turn ChatGPT into one. So I’ve created my own system, and I’m calling it Tiro.

What I’m trying to do

There are lots of little bits of information I get during the day that I want to keep, but I’ve always found it difficult to do so. Sometimes the information seems to require its own app (useful links, for instance), and I don’t like having lots of different apps and services that may close down in the future. Sometimes the information seems best held in a spreadsheet, or it doesn’t have a clear place to go and ends up as a note (one that I may never refer to again; see my Q1 2025 quarterly reflection). So I end up with lots of places storing these little bits of information. What I need is a system where:

- I can store information with some metadata, such as the type of information it represents, the date, the relevant project.

- I can store freeform information — little notes in markdown format.

- I can also store structured information, for example meeting times, contact information, exercise times and repetitions, expenses, web page addresses.

Ideally I would like to be able to add structured information without having to make any changes to the system, so if I think of a new thing I want to store, I can do so by literally just typing a new field name and the value.

Here are some ideas for the types of information I might want to store in this way:

- Todo: An actionable task or item you need to complete.
- Idea: A creative concept or innovative thought worth exploring.
- Exercise: A record of physical activity or workout details.
- Event: A scheduled meeting, appointment, or important date to remember.
- Contact: Information about a person, including details for follow-up.
- Thought: A brief personal reflection or insight capturing your state of mind.
- Quote: A memorable or impactful statement that you want to remember.
- Learned: A lesson or piece of new information acquired from an experience.
- Tool: A resource, application, or utility that supports your work or projects.
- To-learn: A question and answer for spaced repetition.
- To-read: A link or reference to material queued up for reading.
- To-study: A resource intended for deeper learning or in-depth research.
- Inspiration: Content or ideas that spark creativity or motivation.
- Random: Miscellaneous notes that don’t neatly fit into other categories.

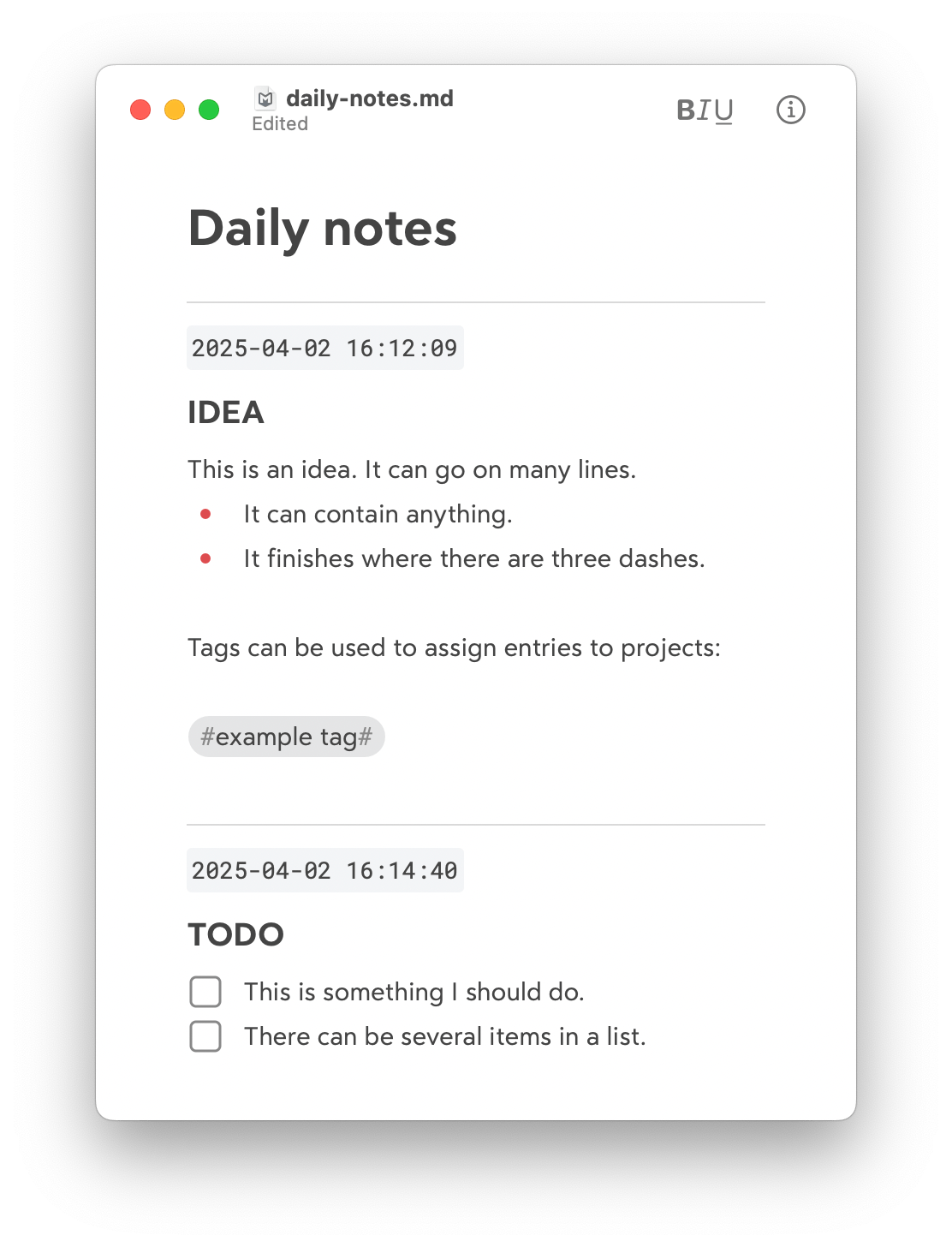

The note format

So to that end I have created a little Python script that processes a markdown file with a simple structure. Entries are separated by three dashes. Each entry starts with a timestamp in backticks on its own line, followed directly by a heading that specifies the type of note, followed by the note itself. Notes can include tags, which are lines starting with a hash (a single word like #example, or several words closed by a second hash like #example tag#), and variables, which are lines made up of a variable name, a colon, a space and the value. Let’s take a look at an example file:

# Daily notes

---

`2025-04-02 16:12:09`

### IDEA

This is an idea. It can go on many lines.

* It can contain anything.

* It finishes where there are three dashes.

Tags can be used to assign entries to projects:

#example tag#

---

`2025-04-02 16:14:40`

### TODO

- [ ] This is something I should do.

- [ ] There can be several items in a list.

---

`2025-04-02 16:15:22`

### THOUGHT

This is a thought. It’s just unstructured text.

---

`2025-04-02 16:33:38`

### EXERCISE

rowing: 2k, 8:20

press-ups: 20,20,20

pull-ups: 10,12,12

This is a note

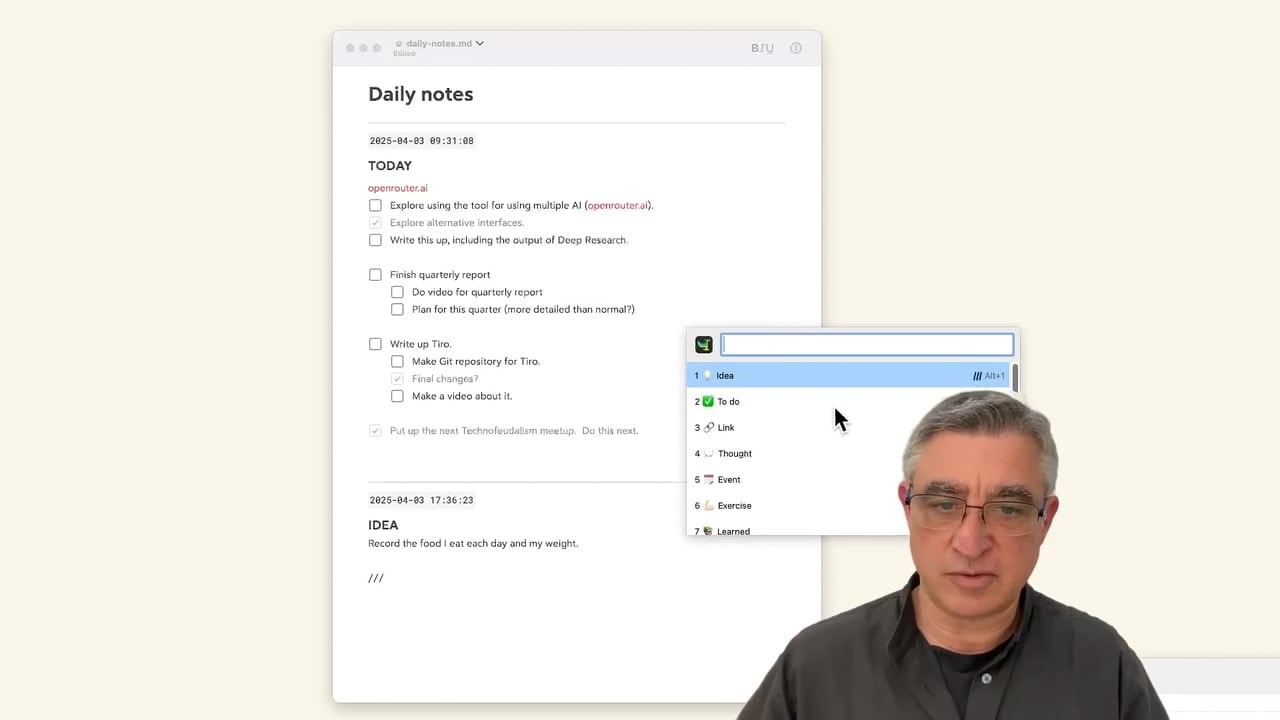

---You could use any markdown editor to edit the file. I personally use Panda, which is a stand-alone markdown editor from the makers of Bear App, which I use to manage my website and a bunch of other stuff. I also use the wonderful Espanso text expander app to automatically generate the date and basic format for entries.

This is what the above looks like in Panda:

I keep that window open all day and just keep adding notes to it.
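For anyone not using Espanso, the skeleton it types can also be produced with a few lines of Python. This is a minimal sketch: `new_entry_header` is a hypothetical helper, and the template (delimiter, backticked timestamp, then a `###` heading on the very next line) is inferred from the example file above rather than copied from an actual Espanso configuration.

```python
from datetime import datetime


def new_entry_header(note_type, now=None):
    """Return the opening lines of a new entry (hypothetical helper).

    The heading must come on the line straight after the timestamp, because
    the processing script reads the note type from an entry's second line.
    """
    if now is None:
        now = datetime.now()
    stamp = now.strftime("%Y-%m-%d %H:%M:%S")
    return f"---\n`{stamp}`\n### {note_type.upper()}\n"


print(new_entry_header("thought"), end="")
```

Appending the returned text to `daily-notes.md` gives the same starting point the text expander would.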

The generated table

So that’s the simple format. The processing script does one simple thing: it takes the notes file and converts each entry into a row in a SQLite table, adding new columns whenever it comes across new variables. That’s it! Let’s see what the example above looks like in the SQLite table:

| id | datetime | iso_datetime | type | raw_entry | tags | exercise_rowing | exercise_press_ups | exercise_pull_ups | url |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 1743603129 | 2025-04-02 16:12:09 | idea | This is an idea. It can go on many lines. * It can contain anything. * It finishes where there are three dashes. Tags can be used to assign entries to projects | example tag | | | | |
| 2 | 1743603280 | 2025-04-02 16:14:40 | todo | - [ ] This is something I should do. - [ ] There can be several items in a list. | | | | | |
| 3 | 1743603322 | 2025-04-02 16:15:22 | thought | This is a thought. It’s just unstructured text. | | | | | |
| 4 | 1743604418 | 2025-04-02 16:33:38 | exercise | This is a note | | 2k, 8:20 | 20,20,20 | 10,12,12 | |

The columns like exercise_rowing were all generated automatically, by combining the entry type with the variable name.
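The naming rule can be isolated into a small function. This sketch mirrors what the script does inline (the name `column_name` is illustrative, not the script’s): the variable name is lowercased, hyphens become underscores, and the already-normalized note type is prefixed with an underscore. The special `tags` and `url` columns skip this rule and have no prefix.

```python
def column_name(note_type, variable):
    """Build a column name the way the processing script does.

    note_type is assumed to be already normalized (lowercase, spaces
    replaced by underscores); variable is the text before the colon.
    """
    base_var = variable.lower().replace("-", "_")
    return f"{note_type}_{base_var}"


print(column_name("exercise", "press-ups"))  # exercise_press_ups
print(column_name("exercise", "Rowing"))     # exercise_rowing
```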

So you might ask, what’s the point of this? The idea is that over time the database builds up, and then you can start doing interesting things with the data: generating graphs, categorizing notes and ideas automatically using generative AI, feeding URLs into scripts that do research or summarization, and so on. The table is just the data in a structured format that other scripts can process.
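As an illustration of that kind of downstream script, here is a minimal sketch that reads the exercise columns back out of the table and totals the pull-up repetitions per entry. It assumes the column names shown in the table above and that sets are recorded as comma-separated numbers like `10,12,12`; the function names are hypothetical.

```python
import sqlite3


def total_reps(value):
    """Sum a comma-separated list of repetitions, e.g. '10,12,12' -> 34."""
    return sum(int(part) for part in value.split(",") if part.strip())


def pull_up_totals(conn):
    """Return (iso_datetime, total pull-ups) for every exercise entry that has them."""
    rows = conn.execute(
        "SELECT iso_datetime, exercise_pull_ups FROM daily_notes "
        "WHERE type = 'exercise' AND exercise_pull_ups IS NOT NULL "
        "ORDER BY datetime"
    )
    return [(when, total_reps(reps)) for when, reps in rows]


if __name__ == "__main__":
    # Demo against an in-memory table; use sqlite3.connect("tiro.db") for real data.
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE daily_notes (datetime TEXT, iso_datetime TEXT, "
        "type TEXT, exercise_pull_ups TEXT)"
    )
    conn.execute(
        "INSERT INTO daily_notes VALUES (?, ?, ?, ?)",
        ("1743604418", "2025-04-02 16:33:38", "exercise", "10,12,12"),
    )
    print(pull_up_totals(conn))  # [('2025-04-02 16:33:38', 34)]
```

A list like this is easy to hand to a plotting library once a few months of entries have accumulated.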

The code

So this is the code I generated with o3-mini-high for my personal use case. It’s less than 200 lines and would be easy to modify if you want to do things differently to me, or if your markdown editor has its own idiosyncrasies.

```python
#!/usr/bin/env python3
import sqlite3
import logging
from datetime import datetime
import re


def quote_identifier(name):
    # Column names come from whatever variable names are typed into the notes
    # (and note types such as "to-read" can contain hyphens), so wrap them in
    # double quotes, doubling any embedded quotes, before putting them in SQL.
    return '"' + name.replace('"', '""') + '"'


def main():
    # Configure logging to write to a file with timestamps
    logging.basicConfig(
        filename='process-notes.log',
        level=logging.INFO,
        format='%(asctime)s %(levelname)s: %(message)s',
        datefmt='%Y-%m-%d %H:%M:%S'
    )

    md_file = "daily-notes.md"
    db_file = "tiro.db"

    # Read the markdown file
    try:
        with open(md_file, "r", encoding="utf-8") as f:
            content = f.read()
    except Exception as e:
        logging.error(f"Error reading file {md_file}: {e}")
        return

    # Split content by the '---' delimiter and trim entries
    entries = [entry.strip() for entry in content.split('---') if entry.strip()]

    # Ignore the first and last entries (the first is the file header, e.g. "# Daily notes")
    if len(entries) > 2:
        entries = entries[1:-1]
    else:
        logging.error("Not enough entries to process after ignoring the first and last entries.")

    # Connect to the SQLite database (creates tiro.db if it doesn't exist)
    conn = sqlite3.connect(db_file)
    cursor = conn.cursor()

    # Create the table if it doesn't exist.
    # A new "id" column is added as the auto-incrementing primary key.
    # The "datetime" column is now UNIQUE to prevent duplicate entries.
    cursor.execute('''
        CREATE TABLE IF NOT EXISTS daily_notes (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            datetime TEXT NOT NULL UNIQUE,
            iso_datetime TEXT NOT NULL,
            type TEXT NOT NULL,
            raw_entry TEXT NOT NULL
        )
    ''')

    # Get the current columns in the table for later reference
    cursor.execute("PRAGMA table_info(daily_notes)")
    existing_columns = {row[1] for row in cursor.fetchall()}

    # Ensure iso_datetime column exists (for databases created by an older version)
    if "iso_datetime" not in existing_columns:
        try:
            cursor.execute('ALTER TABLE daily_notes ADD COLUMN iso_datetime TEXT')
            existing_columns.add("iso_datetime")
            logging.info('Added missing column "iso_datetime" to the table.')
        except Exception as e:
            logging.error(f'Error adding column "iso_datetime": {e}')

    inserted_count = 0

    # Compile regex patterns for variable definitions and tag lines.
    variable_pattern = re.compile(r'^(\S+):\s+(.*)$')
    # Tag pattern: either a single word tag (#example) OR a tag with spaces that MUST end with a hash (#example with spaces#)
    tag_pattern = re.compile(r'^(?:#(\S+)$|#(.+\s.+)#$)')

    for entry in entries:
        lines = entry.splitlines()
        if len(lines) < 2:
            logging.info("Skipping entry due to insufficient lines.")
            continue

        # Process the datetime from the first line (enclosed in backticks)
        dt_line = lines[0].strip()
        if dt_line.startswith('`') and dt_line.endswith('`'):
            dt_str = dt_line.strip('`')
        else:
            logging.info("Skipping entry: datetime line is not enclosed in backticks.")
            continue

        try:
            dt_obj = datetime.strptime(dt_str, "%Y-%m-%d %H:%M:%S")
            # Convert to a Unix timestamp (as a string), interpreting the time in the local time zone
            unix_timestamp = str(int(dt_obj.timestamp()))
        except Exception as e:
            logging.error(f"Error parsing datetime '{dt_str}': {e}")
            continue

        # Check if an entry with this timestamp already exists (using the UNIQUE datetime column)
        cursor.execute('SELECT 1 FROM daily_notes WHERE datetime=?', (unix_timestamp,))
        if cursor.fetchone():
            logging.info(f"Entry with datetime {dt_str} (timestamp {unix_timestamp}) already exists. Skipping.")
            continue

        # Process the note type from the second line, stripping leading '#' and spaces,
        # converting to lowercase and replacing spaces with underscores.
        type_line = lines[1].strip()
        note_type = type_line.lstrip('# ').strip().lower().replace(" ", "_")

        variables = {}
        raw_lines = []
        urls = []
        tags_list = []

        # Process remaining lines for tags, variable definitions, and URL lines.
        for line in lines[2:]:
            line_stripped = line.strip()

            # Check for tag lines: they must be on their own line.
            match_tag = tag_pattern.match(line_stripped)
            if match_tag:
                # If it's a single-word tag, group(1) is used; otherwise, group(2) holds the tag with spaces.
                tag = match_tag.group(1) if match_tag.group(1) is not None else match_tag.group(2)
                tags_list.append(tag)
                continue

            # Process variable definitions (e.g. "rowing: 2k, 8:20")
            match_var = variable_pattern.match(line_stripped)
            if match_var:
                base_var = match_var.group(1).lower().replace('-', '_')
                new_var = f"{note_type}_{base_var}"
                var_value = match_var.group(2).strip()
                variables[new_var] = var_value
                continue

            # Process URL lines: any line starting with "http"
            if line_stripped.lower().startswith("http"):
                urls.append(line_stripped)
                continue

            # For all other lines, add them to raw_lines
            raw_lines.append(line)

        raw_entry = "\n".join(raw_lines).strip()

        # If any tags were found, join them with commas and store in a dedicated variable "tags"
        if tags_list:
            variables["tags"] = ", ".join(tags_list)

        # If any URLs were found, store them in the "url" variable
        if urls:
            variables["url"] = ", ".join(urls)

        # Dynamically add any new columns (for variables) if not already present.
        for var in variables:
            if var not in existing_columns:
                alter_query = f'ALTER TABLE daily_notes ADD COLUMN {quote_identifier(var)} TEXT'
                try:
                    cursor.execute(alter_query)
                    existing_columns.add(var)
                    logging.info(f"Added new column '{var}' to the table.")
                except Exception as e:
                    logging.error(f"Error adding column '{var}': {e}")

        # Prepare the list of columns and corresponding values to insert.
        # Note: The "id" column is auto-generated, so we don't insert it manually.
        insert_columns = ["datetime", "iso_datetime", "type", "raw_entry"] + list(variables.keys())
        insert_values = [unix_timestamp, dt_str, note_type, raw_entry] + list(variables.values())
        placeholders = ', '.join(['?'] * len(insert_columns))
        columns_str = ', '.join(quote_identifier(col) for col in insert_columns)

        try:
            cursor.execute(f'''
                INSERT INTO daily_notes ({columns_str})
                VALUES ({placeholders})
            ''', insert_values)
            inserted_count += 1
            logging.info(f"Inserted entry with datetime {dt_str} (timestamp {unix_timestamp}).")
        except Exception as e:
            logging.error(f"Error inserting entry with datetime {dt_str}: {e}")

    conn.commit()
    conn.close()

    logging.info(f"Processed {len(entries)} entries. Inserted {inserted_count} new entries into {db_file}.")


if __name__ == "__main__":
    main()
```
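The update at the top of this post switched variables to Dataview’s double-colon syntax, but the script above still expects a single colon followed by a space; with it, a line like `rowing:: 2k` is captured as a variable named `rowing:`. One possible adjustment, sketched here as an assumption rather than as the updated script, is a pattern that accepts either form. Its two groups mean the same thing as in `variable_pattern`, so it could be swapped in directly, with one difference: variable names can no longer contain colons.

```python
import re

# Accepts the original "key: value" form (colon followed by whitespace) and the
# Dataview-style "key:: value" form (space after the double colon optional).
# Variable names may not contain colons or whitespace, so URLs never match.
VARIABLE_PATTERN = re.compile(r'^([^\s:]+)(?:::\s*|:\s+)(.*)$')


def parse_variable(line):
    """Return (name, value) for a variable line, or None for any other line."""
    match = VARIABLE_PATTERN.match(line.strip())
    if not match:
        return None
    return match.group(1), match.group(2).strip()


for sample in ["rowing: 2k, 8:20", "rowing:: 2k, 8:20",
               "press-ups::20,20,20", "https://example.com/workout"]:
    print(sample, "->", parse_variable(sample))
```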

Next steps

So now I need to start keeping notes in this new semi-structured format for a few months and then I can start the really fun part—processing the collected information into useful formats, charts and graphs. I am really looking forward to that.

Below is a specification I got ChatGPT to write based on the code.

Tiro specification

Tiro is a personal note-processing system designed for fast, structured capture and organization of daily notes. Tiro allows you to record diverse note types in plain Markdown. The system then parses these entries and stores them in a dynamic SQLite database for further analysis (e.g., graphing exercise performance or categorizing bookmarks).

Input Format

Source File

- Filename: daily-notes.md

- Format: Markdown with note entries separated by `---`.

Note Structure

Each entry must include:

- Timestamp Line: The first line of the entry, enclosed in backticks, e.g. `2025-04-02 16:33:38`.
- Note Type Header: A header line directly after the timestamp indicating the entry type, e.g. ### EXERCISE.
- Body Content: Contains freeform text that may include:
  - Variable Definitions: Lines in the format key: value (e.g., rowing: 2k, 8:20).
  - Tag Lines: Lines starting with a single hash (e.g., #milton). Tags must be on their own line; hashes in the middle of lines are ignored. Tags containing spaces must also end with a hash (e.g., #example tag#).
  - URL Lines: Lines starting with “http” or “https”.
  - Other Text: Any other content, which is stored as the raw note body.

Example Entry

```markdown
---
`2025-04-02 16:33:38`
### EXERCISE
rowing: 2k, 8:20
press-ups: 20,20,20
#milton
https://example.com/workout
Felt strong today.
```

Script Behavior

Parsing and Processing

1 File Reading & Splitting

- The script reads daily-notes.md and splits its content by the delimiter `---`.

- It trims each entry, drops empty ones, and then always discards the first and last remaining entries (the first is typically the file’s header, such as # Daily notes).

2 Timestamp Extraction

- The first line (enclosed in backticks) is parsed into a timestamp.

- Two representations are stored:

- datetime: The Unix timestamp (as a string) used for deduplication and as a unique key.

- iso_datetime: The original human-readable timestamp.
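Because the script calls `timestamp()` on a naive `datetime`, the Unix value depends on the local time zone of the machine running it. The values in the table earlier are consistent with a UTC+2 offset (16:12:09 local is 14:12:09 UTC). The sketch below makes the offset explicit; the `timestamps` function and its `utc_offset_hours` parameter are assumptions for illustration, not part of the script.

```python
from datetime import datetime, timedelta, timezone


def timestamps(dt_str, utc_offset_hours):
    """Return (unix_timestamp_as_text, iso_datetime) for a timestamp line.

    The script interprets the time in the machine's local zone; here the
    offset is passed explicitly so the result does not depend on where it runs.
    """
    naive = datetime.strptime(dt_str.strip("`"), "%Y-%m-%d %H:%M:%S")
    aware = naive.replace(tzinfo=timezone(timedelta(hours=utc_offset_hours)))
    return str(int(aware.timestamp())), naive.strftime("%Y-%m-%d %H:%M:%S")


print(timestamps("`2025-04-02 16:12:09`", 2))  # ('1743603129', '2025-04-02 16:12:09')
```

Keeping the human-readable `iso_datetime` alongside the Unix value means the original wall-clock time survives even if the zone assumption turns out to be wrong.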

3 Note Type Extraction

- The second line is processed to extract the note type.

- The type is normalized (lowercase with underscores replacing spaces).
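The normalization can be reproduced in one line. This sketch copies the script’s chain of string operations into a hypothetical `normalize_type` function. Note that, unlike variable names, hyphens in the type are not converted, so a To-read entry with an `author:` variable would produce a column called `to-read_author`, which needs to be quoted when used in SQL.

```python
def normalize_type(type_line):
    """Normalize a heading line the same way the processing script does."""
    return type_line.strip().lstrip("# ").strip().lower().replace(" ", "_")


print(normalize_type("### EXERCISE"))  # exercise
print(normalize_type("### To Read"))   # to_read
print(normalize_type("### To-read"))   # to-read (hyphens are kept)
```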

4 Body Content Processing

The remaining lines of each entry are processed to extract:

- Variable Definitions: Lines matching the pattern key: value are captured. The variable name is prefixed with the note type (e.g., exercise_press_ups).

- Tag Extraction: Lines that begin with a single hash (e.g., #milton) are captured as tags.

- Multiple tag lines are combined into a comma-separated list and stored in a dedicated tags column.

- Tag lines are removed from the raw note text.

- URL Extraction: Lines starting with “http” are identified and stored in a url variable.

- Raw Entry: All other lines are combined to form the raw_entry field.
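The order of these checks matters: each line is tested as a tag first, then as a variable, then as a URL, and anything left over becomes part of `raw_entry`. A condensed sketch of that precedence, reusing the script’s two regular expressions (the `classify_line` function itself is hypothetical):

```python
import re

# The same patterns the processing script uses.
VARIABLE_PATTERN = re.compile(r'^(\S+):\s+(.*)$')
TAG_PATTERN = re.compile(r'^(?:#(\S+)$|#(.+\s.+)#$)')


def classify_line(line):
    """Return a (kind, payload) pair for one body line of an entry."""
    stripped = line.strip()
    tag = TAG_PATTERN.match(stripped)
    if tag:
        # group(1) is a single-word tag; group(2) is a multi-word tag.
        return "tag", tag.group(1) if tag.group(1) is not None else tag.group(2)
    var = VARIABLE_PATTERN.match(stripped)
    if var:
        return "variable", (var.group(1), var.group(2).strip())
    if stripped.lower().startswith("http"):
        return "url", stripped
    return "raw", line


body = ["rowing: 2k, 8:20", "press-ups: 20,20,20", "#milton",
        "https://example.com/workout", "Felt strong today."]
for line in body:
    print(classify_line(line))
```

One consequence of this order is that any line beginning with a single word, a colon and a space, such as `Note: call back`, is stored as a variable rather than as text.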

5 Dynamic Schema Evolution

- For each extracted variable (including url and tags), if the corresponding column does not exist in the database, the script automatically alters the table to add that column.

- Columns are named using the composite format (e.g., exercise_press_ups), except for the special tags and url columns, which are stored without any type prefix.
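A sketch of this step on its own, assuming the `daily_notes` table already exists. It quotes each column name so that names containing characters such as hyphens (for example `to-read_author`) are still valid SQL; `ensure_columns` and `quote_identifier` are illustrative names.

```python
import sqlite3


def quote_identifier(name):
    """Quote a column name for SQLite, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def ensure_columns(conn, names):
    """Add a TEXT column to daily_notes for each missing name; return the names added."""
    existing = {row[1] for row in conn.execute("PRAGMA table_info(daily_notes)")}
    added = []
    for name in names:
        if name not in existing:
            conn.execute(f"ALTER TABLE daily_notes ADD COLUMN {quote_identifier(name)} TEXT")
            existing.add(name)
            added.append(name)
    return added


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE daily_notes (id INTEGER PRIMARY KEY, raw_entry TEXT)")
print(ensure_columns(conn, ["exercise_rowing", "to-read_author", "tags"]))
# ['exercise_rowing', 'to-read_author', 'tags']
print(ensure_columns(conn, ["exercise_rowing"]))  # []
```

Calling it a second time with the same names does nothing, which is what lets the script run repeatedly over a growing notes file.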

6 Deduplication

- Before insertion, the script checks whether an entry with the same Unix timestamp already exists to prevent duplicate records.
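Since `datetime` is declared UNIQUE, SQLite would also reject a duplicate on its own. An alternative to the separate SELECT (not what the script does) is to let the constraint do the work with `INSERT OR IGNORE` and check whether a row was actually written. The `insert_once` function is a hypothetical sketch.

```python
import sqlite3


def insert_once(conn, unix_timestamp, iso_datetime, note_type, raw_entry):
    """Insert an entry unless one with the same timestamp exists; return True if inserted."""
    cursor = conn.execute(
        "INSERT OR IGNORE INTO daily_notes (datetime, iso_datetime, type, raw_entry) "
        "VALUES (?, ?, ?, ?)",
        (unix_timestamp, iso_datetime, note_type, raw_entry),
    )
    # rowcount is 1 when the row was written and 0 when the insert was ignored.
    return cursor.rowcount == 1
```

One trade-off: `OR IGNORE` also silently skips rows that violate other constraints, such as NOT NULL, so a skipped row is no longer guaranteed to be a duplicate. The explicit SELECT keeps that distinction visible in the log.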

Database Schema

- Database File: tiro.db

- Table Name: daily_notes

- Columns:

- id: Auto-incrementing primary key (INTEGER PRIMARY KEY AUTOINCREMENT)

- datetime: Unix timestamp (TEXT, marked as UNIQUE)

- iso_datetime: Human-readable timestamp (TEXT)

- type: Note type (TEXT)

- raw_entry: Raw body content of the note (TEXT)

- Dynamic Columns: Created on-the-fly for variables such as:

- exercise_press_ups

- url

- tags

- …and any other keys defined in the note body.

Logging

- Logs are written to process-notes.log.

- Logs capture:

- File read errors

- Entry parsing and processing details

- New column additions

- Duplicate entries and insertion outcomes

Extensibility & Future Enhancements

- Link Enrichment: Automatically retrieve metadata (titles, descriptions) for URLs.

- Tagging Improvements: Advanced tag search and filtering.

- Data Analysis: Integration with tools (e.g., Pandas, Jupyter) for generating graphs and reports (e.g., exercise trends).

- Export Options: Options to export data as CSV, JSON, or HTML.

- Synchronization: Future use of the id column for cross-device syncing or additional row-level operations.
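As a rough idea of how little the CSV export option might take, here is a sketch using only the standard library. The function name and output filename are assumptions. Because columns are added dynamically, it reads the column names from the query result instead of hard-coding them.

```python
import csv
import sqlite3


def export_csv(db_file, csv_file):
    """Write every row of daily_notes to csv_file with a header row; return the row count."""
    conn = sqlite3.connect(db_file)
    try:
        cursor = conn.execute("SELECT * FROM daily_notes ORDER BY id")
        # cursor.description holds one tuple per result column; index 0 is the name.
        header = [column[0] for column in cursor.description]
        rows = cursor.fetchall()
    finally:
        conn.close()
    with open(csv_file, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(header)
        writer.writerows(rows)
    return len(rows)
```

For example, `export_csv("tiro.db", "tiro-export.csv")` would write the whole table, including any columns added since the last run.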

Usage Workflow

1. Note Capture: Use a Markdown file (daily-notes.md) to write entries using the prescribed format.
2. Processing: Run the process-notes.py script to parse the file and update the tiro.db SQLite database.
3. Analysis & Reporting: Use secondary scripts to query the database for insights, visualization, and further processing.